In my career, I’ve run across a lot of terrible installers in a variety of forms. The one I ran across today, though, is noteworthy enough that I want to point it out for the following reasons:

- It’s an installer application. I have opinions on those.

- It’s for a security product where, as part of the installation, you need to provide the username and password for an account on the Mac which has:

  - Administrator privileges

  - Secure Token

Note: I have no interest in talking to the vendor’s legal department, so I will not be identifying the vendor or product by name in this post. Instead, I will refer to the product and vendor in this post as “ComputerBoat” and leave discovery of the company’s identity to interested researchers.

For more details, please see below the jump.

To install ComputerBoat, you will need the following:

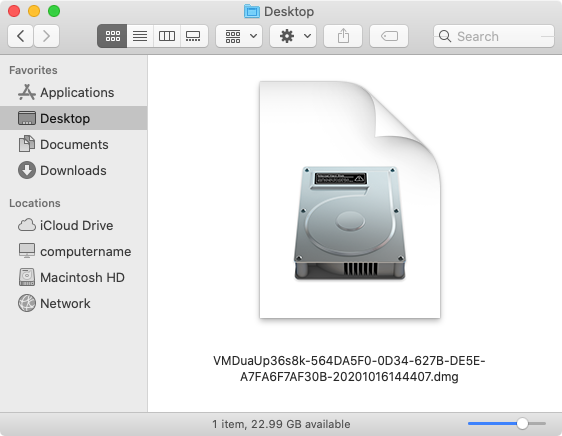

- The ComputerBoat installer application.

- A configuration file for the ComputerBoat software.

- The username and password of an account with the following characteristics:

- Administrator privileges

- Secure Token

Once you have those, you can run the following command to install ComputerBoat:

Why is it necessary to provide the username and password of an account with admin privileges and a Secure Token? So this product can set up its own account with admin privileges and a Secure Token!

Why is it necessary for this product to set up its own account with admin privileges and a Secure Token? I have no idea. Even if it is absolutely necessary for that service account to exist, there is no sane reason why an application’s service account needs a Secure Token. In my opinion, there are only three reasons why a service account might need a Secure Token:

- To add the service account to the list of FileVault-enabled users.

- To enable the service account to create other accounts which have Secure Tokens themselves.

- To enable the service account to rotate FileVault recovery keys.

All of those reasons have serious security implications. Even more serious security implications are raised by the fact that this vendor thought requesting the username and password of an account with admin privileges and a Secure Token was an acceptable part of an installation workflow. To further illustrate this, here’s a sample script which the vendor provided for installation using Jamf Pro:

Here, the installation workflow is as follows:

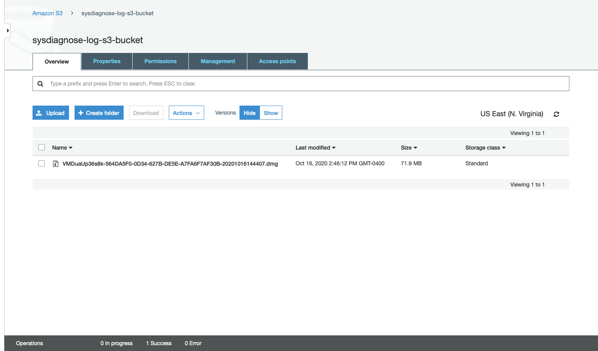

- Use curl to download a compressed copy of the ComputerBoat installer’s configuration file in .zip format.

- Use ditto to unzip the downloaded configuration file into a defined location on your Mac.

- Use ditto to unzip the downloaded installer into the same defined location on this Mac.

- Run a directory listing of the defined location.

- Remove extended attributes from the uncompressed ComputerBoat installer application.

- Using credentials for an admin account with Secure Token, install the ComputerBoat software and set up a new account with a Secure Token to act as the application’s service account.

- Check whether a command to get the newly-installed application’s version number returns anything.

  - A. If the version number comes back, the installation is reported as having succeeded.

  - B. If nothing comes back, the installation is reported as having failed.
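The final step of that workflow can be sketched as follows. This is a minimal illustration and not the vendor's script: the helper name `report_install_result` is made up, and it takes the version string as an argument because the actual command that reports the version is not reproduced here.

```shell
#!/bin/bash
# Hypothetical helper (not from the vendor's script): takes whatever the
# version query printed and treats a non-empty string as a successful install.
report_install_result() {
    local reported_version="$1"
    if [ -n "$reported_version" ]; then
        echo "ComputerBoat installed, version: ${reported_version}"
        return 0
    fi
    # An empty string is the only failure signal this check has.
    echo "ComputerBoat installation failed: no version reported" >&2
    return 1
}
```

Note that a check like this only tells you that something answered the version query. It says nothing about whether the service account or anything else was set up correctly.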

The only defense I can think of for the vendor is that it says “Sample” in the description. That may imply that the vendor built this as a proof of concept and may be trying to subtly encourage their customer base to develop better solutions for deploying the ComputerBoat software on Macs. On the other hand, I received this script on the customer end of the transaction. That means someone at the vendor thought this was good enough to give to a customer. Either way, it’s not a good look.

Why is this script problematic?

Security problems

- You need to supply the username and password for an account on the Mac with admin privileges and Secure Token, and you need to supply them in a way that other processes on the computer can read. This leaves open the possibility that a malicious process will see and steal that username and password for its own ends.

- The script is set to run in debug mode, thanks to the set -x setting near the top of the script. While this may be helpful in figuring out why the installation process isn’t working, the verbose output provided by debug mode will include the username and password of the account on the Mac with admin privileges and Secure Token.
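Both leaks are easy to reproduce. Here is a minimal sketch using fake placeholder credentials and a stand-in for the installer. The names `fake_installer`, `DEMO_USER`, and `DEMO_PASS` are made up for illustration and do not come from the vendor's script.

```shell
#!/bin/bash
# Fake placeholder credentials, for demonstration only.
DEMO_USER="demo_admin"
DEMO_PASS="NotARealPassword123"

# Hypothetical stand-in for an installer that takes credentials as arguments.
fake_installer() {
    return 0
}

# Leak 1: set -x prints each command after variable expansion.
# The trace goes to stderr, so capture stderr from a subshell with tracing on.
TRACE_OUTPUT=$( (set -x; fake_installer "$DEMO_USER" "$DEMO_PASS") 2>&1 )
echo "Trace output: ${TRACE_OUTPUT}"

# Leak 2: the arguments of a running process show up in the process list.
# Start a short-lived background process that carries the fake credentials
# as arguments, then look it up with ps the way any other process could.
sh -c 'sleep 5; :' fake_installer "$DEMO_USER" "$DEMO_PASS" >/dev/null 2>&1 &
DEMO_PID=$!
sleep 1
PS_OUTPUT=$(ps -o args= -p "$DEMO_PID" 2>/dev/null) || true
kill "$DEMO_PID" 2>/dev/null || true
wait "$DEMO_PID" 2>/dev/null || true
echo "Process list entry: ${PS_OUTPUT:-<ps unavailable>}"
```

In both cases the fake password appears in plain text: once in the trace output that a management tool would typically log, and once in the process list while the command is running.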

Installation problems

1. Without supplying the username and password for an account on the Mac with admin privileges and Secure Token, the installation process does not work.

If you’re deploying this security application to your fleet of Macs, that means that the vendor has made the following assumptions:

- You have an account with admin privileges and Secure Token on all of your Macs, and it uses the same username and password on each one.

- You’re OK with providing these credentials in plaintext, either embedded in the script or provided by a Jamf Pro script parameter in a policy.

2. Without providing a separate server to host the ComputerBoat installer’s configuration file, the installation process does not work.

- If you’re deploying this software, the vendor apparently did not think of using Jamf Pro itself as the delivery mechanism for this configuration file. Hopefully you’ve got a separate web server or online file service which allows for anonymous downloading of a file via curl.

3. Without figuring out a way to get the installer into the same location as the downloaded configuration file, the installation process does not work.

- Overlooked by the installation script is this question: How does the installer get to the location defined in the script as $COMPUTERBOATEPM_INSTALL_TMP ? The script assumes it’ll be there without including any actions to get it there or checking that it is there.
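A check for the installer's presence would take only a few lines. The sketch below is an assumption about what such a check could look like, not something from the vendor's script: the function name `installer_is_present` is made up, while the app bundle name comes from the script lines quoted in this post.

```shell
#!/bin/bash
# Hypothetical pre-flight check (not part of the vendor's script).
# Returns 0 if the installer app bundle exists in the given directory,
# and 1 with a message on stderr if it does not.
installer_is_present() {
    local install_dir="$1"
    local installer_app="${install_dir}/Install ComputerBoat EPM.app"
    if [ -d "$installer_app" ]; then
        return 0
    fi
    echo "Installer not found at: ${installer_app}" >&2
    return 1
}
```

Calling `installer_is_present "$COMPUTERBOATEPM_INSTALL_TMP"` before the install step, and exiting with an error if it fails, would at least turn a confusing failure into a clear one.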

There are further issues with this script, but they fall into the category of quirks rather than actual problems. For example, I can’t figure out the purpose of the following lines:

    ls -al $COMPUTERBOATEPM_INSTALL_TMP
    xattr -d $COMPUTERBOATEPM_INSTALL_TMP/Install\ ComputerBoat\ EPM.app

Neither command seems to do anything useful. The first one will list the contents of the directory with the configuration file and the installer application, but then that information isn’t captured or used anywhere. The second removes extended attributes from the ComputerBoat installer application, but the reason for this removal isn’t explained in any way.

You can draw conclusions about a vendor and their product quality by looking at how that vendor makes it possible to install their product. In examining this installation process, especially considering this is for a product intended to improve security in some way, I have drawn the following conclusions:

- The vendor has not invested resources in building macOS development or deployment expertise.

- The vendor is unwilling or unable to avoid compromising your security with their product’s installation process.

- The vendor is not serious about developing or maintaining a quality product for macOS.

When you see installation practices like this, I recommend that you draw your own conclusions on whether this is a vendor or a product you should be using.